Combining Google Speech-to-Text and OpenAI for Transcription and Summary

I was first introduced to Bitcoin circa 2015 when I got a gig transcribing Bitcoin podcasts (Let's talk Bitcoin) for 0.5 bitcoin each.

My typing speed is above average so it didn't take too long to transcribe the roughly hour-long podcast at 2x speed. Some days were better than others - some guests with exotic accents made me spend precious time rewinding again and again to catch that one word.

Funnily enough, for the first two transcriptions I did, I was paid only $50 each because some industrious fellow had taken the gigs for himself, outsourced them to me, and kept the difference as the middleman. I started searching around, found the actual gig, and the rest is history.

I shared this anecdote because, in this post, I will be revisiting that gig of mine. It's been a while since then, and I must admit that my typing speed has diminished somewhat, so completing the task by hand now might take me a bit longer than before.

Let's get started. Here are the requirements:

- A Google Cloud Account with the Speech-To-Text API enabled. You can enable it by visiting https://console.cloud.google.com/speech.

- A Google service account key in JSON format.

- An OpenAI API key.

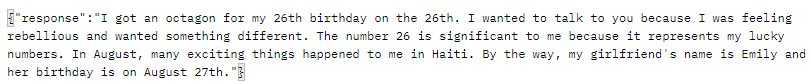

Now, let's discuss our objective in simple terms. We will begin by retrieving our audio source, which, in this case, is a YouTube Short in MP3 format. Next, we will use Google Speech-to-Text to transcribe the audio for us. Finally, we will pass the transcribed text to ChatGPT to clean it up and summarize it.

The end goal

At the end of this article, you will have a Node.js server that can take a YouTube Shorts URL and give you a cleaned-up, summarized transcript of its audio.

But before diving into the code, let's make sure we have all the necessary dependencies installed. Please follow the steps below:

1. Install the youtube-dl-exec package by running the command:

    npm install -g youtube-dl-exec

2. Install the axios, express, and @google-cloud/speech packages by running the following command:

    npm install axios express @google-cloud/speech

The code below invokes the yt-dlp command directly, so make sure it is available on your PATH. Once the installation is complete, we'll be ready to proceed with the code.

Fetching YouTube Shorts as MP3

A key constraint of the Google Cloud Platform (GCP), when transcribing audio content uploaded directly to the Speech-To-Text API, is that the audio must not exceed one minute in length. This means that if our audio source is longer than one minute, we'll need to truncate or split it into shorter segments before proceeding with the transcription process.
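To make the splitting option concrete, here is a minimal sketch that only computes where the cuts would fall; the cutting itself would be done by a separate audio tool. The planSegments() helper and its 60-second default are assumptions for illustration, not part of the Speech-to-Text API.

```javascript
// Hypothetical helper: plan [start, end) windows (in seconds) so that no
// segment is longer than maxSeconds. It does not touch the audio itself.
function planSegments(durationSeconds, maxSeconds = 60) {
  if (!(durationSeconds > 0) || !(maxSeconds > 0)) {
    return [];
  }
  const segments = [];
  for (let start = 0; start < durationSeconds; start += maxSeconds) {
    segments.push({ start, end: Math.min(start + maxSeconds, durationSeconds) });
  }
  return segments;
}

// A 150-second clip needs three segments: 0-60, 60-120, and 120-150.
console.log(planSegments(150));
```

A clip that is already under the limit comes back as a single segment, so the same code path can serve both short and long sources.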

Another option, if the audio duration extends beyond one minute, is to first upload the audio to Google Cloud Storage (GCS) and reference it by its URI in the request. To keep things simple, the example below sends the audio inline, which is why it only works with YouTube Shorts as our source material.
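As a rough illustration of the two ways of handing audio to the API, the sketch below builds the audio part of a request either from a local file (inline content) or from a Cloud Storage URI. The buildAudio() helper and the bucket name are hypothetical; content and uri are the two fields a recognition request's audio object can carry.

```javascript
const fs = require('fs');

// Hypothetical helper: choose between inline audio and a Cloud Storage reference.
function buildAudio(source) {
  if (source.startsWith('gs://')) {
    // Longer recordings: point the API at an object already uploaded to GCS.
    return { uri: source };
  }
  // Short clips: send the file's bytes inline with the request.
  return { content: fs.readFileSync(source) };
}

// 'my-bucket' is a placeholder, not a real bucket.
console.log(buildAudio('gs://my-bucket/audio.mp3'));
```

Uploading the file to the bucket is a separate step that this sketch does not cover.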

    const { execFile } = require('child_process');

    // Downloads the audio of a YouTube video to assets/audio.mp3.
    // Resolves with the file path on success and rejects on failure.
    function fetchSource(videoURL) {
      return new Promise((resolve, reject) => {
        if (!videoURL) {
          reject(new Error('Please provide a valid YouTube video URL.'));
          return;
        }

        // Passing arguments as an array keeps the URL away from the shell.
        // --force-overwrites replaces the audio left behind by a previous run.
        const args = ['-x', '--audio-format', 'mp3', videoURL,
          '--output', 'assets/audio.mp3', '--force-overwrites'];

        execFile('yt-dlp', args, (error) => {
          if (error) {
            reject(new Error('An error occurred during conversion.'));
            return;
          }
          resolve('assets/audio.mp3');
        });
      });
    }

fetchSource() is responsible for fetching the audio source from a given YouTube video URL. Here is a summarized overview of what the code does:

- The function builds the argument list for the yt-dlp command-line tool to download the audio from the specified video URL. It specifies the output format as MP3 and the output file path as assets/audio.mp3.

- The execFile() function is used to run the CLI command. Upon completion, the returned promise either rejects with an error or resolves, at which point the caller can proceed with the transcription process by invoking the transcribeAudio() function.
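Since the URL ends up on a command line, it can be worth checking it before calling fetchSource(). The sketch below is one possible check and is not part of the original code; the isYouTubeShortsUrl() name and the list of accepted hosts are assumptions.

```javascript
// Hypothetical helper: accept only https://(www.|m.)youtube.com/shorts/<id> URLs.
function isYouTubeShortsUrl(input) {
  let url;
  try {
    url = new URL(input);
  } catch (err) {
    return false; // not a parseable URL at all
  }
  const allowedHosts = ['youtube.com', 'www.youtube.com', 'm.youtube.com'];
  return (
    url.protocol === 'https:' &&
    allowedHosts.includes(url.hostname) &&
    /^\/shorts\/[\w-]+\/?$/.test(url.pathname)
  );
}

// VIDEO_ID is a placeholder, not a real video.
console.log(isYouTubeShortsUrl('https://www.youtube.com/shorts/VIDEO_ID'));
console.log(isYouTubeShortsUrl('https://example.com/shorts/VIDEO_ID'));
```

Rejecting anything that is not a Shorts URL also keeps the one-minute limit from being hit by accident with a regular, longer video.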

Transcribing audio using Google Speech-to-Text

    const fs = require('fs');
    const { SpeechClient } = require('@google-cloud/speech');

    async function transcribeAudio() {
      // Authenticate with the service account key from the requirements.
      const client = new SpeechClient({
        keyFilename: 'service-account.json',
      });

      const audioFile = 'assets/audio.mp3';

      // Send the file's bytes inline with the request.
      const audio = {
        content: fs.readFileSync(audioFile),
      };

      const config = {
        encoding: 'MP3',
        sampleRateHertz: 16000, // should match the sample rate of the audio file
        languageCode: 'en-US',
      };

      const request = {
        audio: audio,
        config: config,
      };

      // Start the recognition job and wait for it to finish.
      const [operation] = await client.longRunningRecognize(request);
      const [response] = await operation.promise();

      // Keep the top alternative of each result and join them line by line.
      const transcription = response.results
        .map(result => result.alternatives[0].transcript)
        .join('\n');

      return transcription;
    }

The transcribeAudio() function utilizes the @google-cloud/speech package to transcribe audio files. It reads the audio file, configures the speech recognition request, sends the request to the Speech-to-Text API, retrieves the transcriptions, and returns them as a single string.
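To show what the final mapping step operates on, here is a small sketch run against a hand-written object shaped like the API's response. The sample data is made up, and extractTranscript() is a hypothetical name; unlike the one-liner in transcribeAudio(), it also skips results that carry no alternatives.

```javascript
// Hypothetical helper mirroring the mapping step in transcribeAudio().
function extractTranscript(response) {
  return (response.results || [])
    .filter(result => result.alternatives && result.alternatives.length > 0)
    .map(result => result.alternatives[0].transcript)
    .join('\n');
}

// Made-up response for illustration; real responses carry more fields.
const sampleResponse = {
  results: [
    { alternatives: [{ transcript: 'hello and welcome', confidence: 0.92 }] },
    { alternatives: [] },
    { alternatives: [{ transcript: 'to the show', confidence: 0.88 }] },
  ],
};

console.log(extractTranscript(sampleResponse));
```

Each entry in results covers a consecutive portion of the audio, which is why joining the top alternatives in order yields the full transcript.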

Cleaning up the response with ChatGPT

    const axios = require('axios');

    async function callOpenAI(str) {
      // Assumes the key is exported as the OPEN_AI_API_KEY environment variable.
      const apiKey = process.env.OPEN_AI_API_KEY;
      const endpoint = 'https://api.openai.com/v1/chat/completions';
      const prompt = str;

      const response = await axios.post(endpoint, {
        'model': 'gpt-3.5-turbo',
        'messages': [
          {
            'role': 'system',
            'content': 'As a transcription bot, your primary objective is to clean up and summarize transcribed audio. After receiving the transcribed text, your task is to employ text cleaning techniques to enhance its accuracy and readability. This involves removing filler words, correcting mispronunciations, fixing grammatical errors, and ensuring proper punctuation. Once the text is cleaned, your next step is to generate a concise summary of the transcript using extractive summarization or keyphrase extraction methods. The generated summary should capture the essential information and provide an overview of the transcribed audio. Aim to strike a balance between brevity and preserving the key details.'
          },
          {
            'role': 'user',
            'content': prompt
          }
        ]
      }, {
        headers: {
          'Content-Type': 'application/json',
          'Authorization': `Bearer ${apiKey}`
        }
      });

      // The reply text lives in the first choice's message.
      const reply = response.data.choices[0].message.content;
      return reply;
    }

The callOpenAI() function interacts with the OpenAI API for chat completion. It takes a string prompt as input and performs the following steps:

- Reads the API key from the environment and sets the endpoint URL for the OpenAI API.

- Constructs a system message to provide context for the conversation with the AI model. This particular prompt explains the objective of a transcription bot, which is to clean up and summarize transcribed audio. It describes the tasks involved in cleaning the text and generating a concise summary.

- Makes a POST request to the OpenAI API endpoint, passing the model name (gpt-3.5-turbo) and the messages array containing the system and user messages.

- The response from the API is retrieved, and the content of the AI model's reply is extracted.

- The reply content is returned as the output of the callOpenAI() function.
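The request and response shapes described above can be sketched without any network call. buildChatRequest() and extractReply() are hypothetical helpers, the short system prompt is a stand-in for the longer one used in the post, and the sample response is hand-written to mimic the structure that callOpenAI() reads from.

```javascript
// Hypothetical helper: assemble the JSON body for the chat completions endpoint.
function buildChatRequest(systemPrompt, transcript, model = 'gpt-3.5-turbo') {
  return {
    model,
    messages: [
      { role: 'system', content: systemPrompt },
      { role: 'user', content: transcript },
    ],
  };
}

// Hypothetical helper: pull the assistant's text out of a parsed response body.
function extractReply(data) {
  const choice = data && data.choices && data.choices[0];
  if (!choice || !choice.message) {
    throw new Error('Unexpected response shape: no choices returned.');
  }
  return choice.message.content;
}

const body = buildChatRequest('Clean up and summarize this transcript.', 'um so hello everyone');
console.log(JSON.stringify(body, null, 2));

// Hand-written stand-in for the response body.
const fakeData = { choices: [{ message: { role: 'assistant', content: 'Hello, everyone.' } }] };
console.log(extractReply(fakeData));
```

Separating the payload from the HTTP call this way makes it easy to inspect exactly what is sent, and the explicit check in extractReply() gives a clearer error than a failed property access when the response carries no choices.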

Combining all of it

Instead of calling the script once, we can create an express server by combining all of the above. We can then call localhost:3000/convert?url=<url-here> using Postman to repeat this process.
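Because the video URL is itself passed as a query parameter, it should be URL-encoded. As a small sketch, the request URL can be built like this; buildConvertUrl() is a hypothetical helper, the video ID is a placeholder, and the base address assumes the server runs locally on port 3000 as described above.

```javascript
// Hypothetical helper: build the /convert request URL with an encoded `url` parameter.
function buildConvertUrl(videoURL, base = 'http://localhost:3000') {
  const endpoint = new URL('/convert', base);
  endpoint.searchParams.set('url', videoURL);
  return endpoint.toString();
}

// VIDEO_ID is a placeholder, not a real video.
console.log(buildConvertUrl('https://www.youtube.com/shorts/VIDEO_ID'));
```

Postman applies the same encoding when the value is entered in its query parameter table rather than typed into the address bar by hand.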

Here's a summary of what we've done:

- We set up an Express server and define a route, /convert, that handles the YouTube video conversion and audio transcription.

- Upon accessing the /convert route with a valid YouTube video URL, the code uses the yt-dlp command-line tool to download the audio in MP3 format. It then proceeds with the transcription process using Google Cloud Speech-to-Text.

- The transcribeAudio() function utilizes the @google-cloud/speech package to transcribe the downloaded audio file. It configures the Speech-to-Text request and retrieves the transcription results.

- The callOpenAI() function uses the OpenAI API to generate a response by providing a prompt message and the transcribed text. It aims to create a concise summary of the transcription while preserving key details.

- The application uses Axios to make an HTTP POST request to the OpenAI API, passing the necessary data for the conversation model.

- Upon receiving the OpenAI response, the server sends the generated reply back as a JSON object.

    const express = require('express');
    const { execFile } = require('child_process');
    const fs = require('fs');
    const { SpeechClient } = require('@google-cloud/speech');
    const axios = require('axios');

    const app = express();
    const port = 3000;

    app.get('/', (req, res) => {
      res.send('Welcome to the YouTube to MP3 Converter!');
    });

    app.get('/convert', (req, res) => {
      const videoURL = req.query.url;

      if (typeof videoURL !== 'string' || !videoURL) {
        res.status(400).send('Please provide a valid YouTube video URL.');
        return;
      }

      // Passing arguments as an array keeps the URL away from the shell.
      // --force-overwrites replaces the audio left behind by a previous request.
      const args = ['-x', '--audio-format', 'mp3', videoURL,
        '--output', 'assets/audio.mp3', '--force-overwrites'];

      execFile('yt-dlp', args, async (error, stdout, stderr) => {
        if (error) {
          console.error(`Error: ${error.message}`);
          res.status(500).send('An error occurred during conversion.');
          return;
        }

        // yt-dlp may print warnings to stderr even when it succeeds, so only log them.
        if (stderr) {
          console.error(stderr);
        }

        console.log('Conversion completed successfully!');
        console.log(stdout);

        try {
          const transcription = await transcribeAudio();
          const openAI = await callOpenAI(transcription);
          res.json({ response: openAI });
        } catch (err) {
          console.error(err);
          res.status(500).send('An error occurred during transcription.');
        }
      });
    });

    app.listen(port, () => {
      console.log(`Server is listening on port ${port}`);
    });

    async function transcribeAudio() {
      const client = new SpeechClient({
        keyFilename: '_private/homelab-key-service-account.json',
      });

      const audioFile = 'assets/audio.mp3';

      const audio = {
        content: fs.readFileSync(audioFile),
      };

      const config = {
        encoding: 'MP3',
        sampleRateHertz: 16000, // should match the sample rate of the audio file
        languageCode: 'en-US',
      };

      const request = {
        audio: audio,
        config: config,
      };

      const [operation] = await client.longRunningRecognize(request);
      const [response] = await operation.promise();

      const transcription = response.results
        .map(result => result.alternatives[0].transcript)
        .join('\n');

      return transcription;
    }

    async function callOpenAI(str) {
      // Assumes the key is exported as the OPEN_AI_API_KEY environment variable.
      const apiKey = process.env.OPEN_AI_API_KEY;
      const endpoint = 'https://api.openai.com/v1/chat/completions';
      const prompt = str;

      const response = await axios.post(endpoint, {
        'model': 'gpt-3.5-turbo',
        'messages': [
          {
            'role': 'system',
            'content': 'As a transcription bot, your primary objective is to clean up and summarize transcribed audio. After receiving the transcribed text, your task is to employ text cleaning techniques to enhance its accuracy and readability. This involves removing filler words, correcting mispronunciations, fixing grammatical errors, and ensuring proper punctuation. Once the text is cleaned, your next step is to generate a concise summary of the transcript using extractive summarization or keyphrase extraction methods. The generated summary should capture the essential information and provide an overview of the transcribed audio. Aim to strike a balance between brevity and preserving the key details.'
          },
          {
            'role': 'user',
            'content': prompt
          }
        ]
      }, {
        headers: {
          'Content-Type': 'application/json',
          'Authorization': `Bearer ${apiKey}`
        }
      });

      const reply = response.data.choices[0].message.content;
      return reply;
    }

Back to the story

Well, that didn't take nearly as long as I feared it would.